The Vietnamese Government has issued its first Draft Law on Artificial Intelligence (AI Law), marking the nation's first concrete step toward a legal framework governing the development of Artificial Intelligence (AI). Once enacted, the AI Law would place Vietnam among the few countries in the world with a dedicated act governing AI development. The draft demonstrates the Government's proactive approach and its effort to make Vietnam a promising destination for AI projects in this new era.

The Draft AI Law establishes regulations for managing and controlling AI systems based on risk. This article summarizes several key provisions in the current Draft AI Law concerning the classification of AI systems.

1. Classification of AI systems by risk level

According to the Draft AI Law, AI systems are classified into the following risk levels:

(i) Unacceptable risk: An AI system capable of causing serious, irreparable harm to human rights, national security, public order, and social safety, or one used to commit acts that are strictly prohibited by law.

(ii) High risk: An AI system likely to cause significant harm to the life, health, legal rights, and interests of individuals, organizations, or other important public interests.

(iii) Medium risk: An AI system that is not classified as unacceptable or high risk, but poses a risk of deceiving, confusing, or manipulating users because they are unaware that they are interacting with an AI system or that the content it produces is AI-generated, unless this is obvious from the context of use.

(iv) Low risk: An AI system that does not fall into the unacceptable, high, or medium risk categories.

Before an AI system is placed on the market or deployed, the provider or deployer is responsible for self-classifying it according to the criteria in this Law and the Government's guidance, for preparing and retaining technical documentation, and for bearing legal liability for the results of the self-classification. If the risk group cannot be definitively determined, the provider may submit a request for consultation and written confirmation, together with the technical dossier, to the standing agency of the National Committee on Artificial Intelligence.

The Government shall issue the criteria, assessment methods, and procedures for periodically updating the risk levels of AI systems.

2. Principles for classification and management of AI systems

The classification and management of AI systems must adhere to the following key principles:

a) Risk classification must be based on scientific evidence and verifiable data, taking into account the system's degree of autonomy, learning capability, and scope of impact, and must be consistent with the technology's nature, its intended use, and reasonably foreseeable […]. Classification must consider both the intended use and the consequences or impacts that may arise.

b) Risk classification must be based on criteria that include both qualitative and quantitative factors, ensuring transparency and consistency in application.

c) Criteria, classification methods, and risk assessment tools must be periodically updated in line with technological developments and practical management requirements.

d) Management measures must be commensurate with the risk level. Pre-market conformity assessment is required only for systems posing a clear and serious risk of harm; all other systems are subject to post-market surveillance and assessment.

e) Results of conformity assessment, certification, or recognition that are granted by competent authorities, or recognized under specialized laws, international standards, or international treaties to which Vietnam is a party, shall be recognized as equivalent when assessing compliance with this Law.

f) In the event of significant changes in the intended use or scope of impact, or when the High-Risk System list is updated, organizations and individuals are responsible for reviewing and reclassifying their systems as guided by the competent authority.

3. Prohibition on unacceptable-risk AI systems

AI systems falling into the unacceptable risk group are prohibited from being placed on the market or put into use in any form. Unacceptable-risk AI systems include those used to commit acts prohibited by law and the following cases:

a) Deliberately manipulating human perception and behavior to undermine autonomy, leading to physical or psychological harm.

b) Use of AI systems by state agencies, organizations, or businesses to establish social credit scores for individuals, leading to unfavorable or unfair treatment in unrelated social contexts.

c) Exploiting the vulnerabilities of specific groups (based on age, disability, or socio-economic circumstances) to influence their behavior in a way that causes harm to themselves or others.

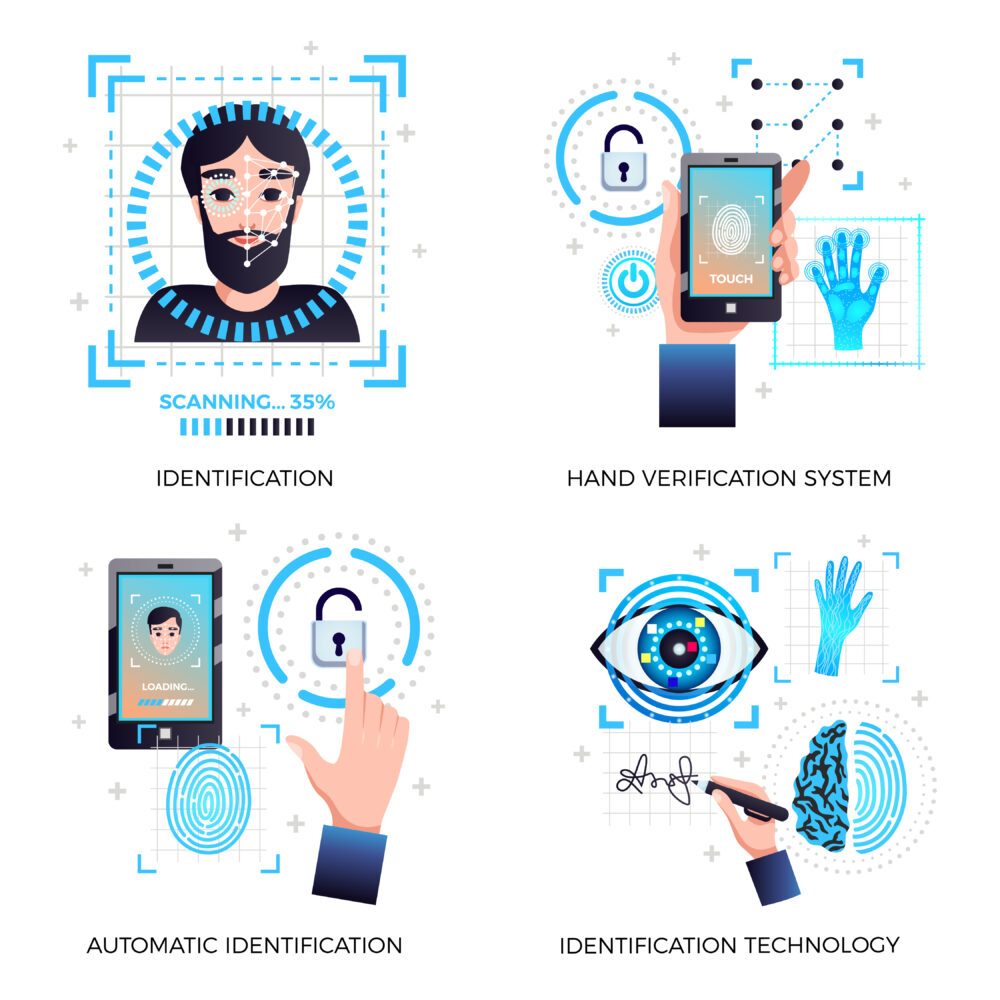

d) Developing and exploiting large-scale facial recognition databases through the illegal, non-consensual collection of facial images from the internet or surveillance camera systems.

e) Producing or disseminating AI-generated deepfake content that is likely to cause severe harm to public order, social safety, or national security.

f) Developing or using AI systems to undermine the State of the Socialist Republic of Vietnam.

4. Management mechanism for high- and medium-risk systems

For high-risk AI systems: Before being circulated or deployed, or when their operation changes significantly, these systems must undergo a conformity assessment under Article 18 of the Draft AI Law, and they remain subject to inspection and examination by competent state agencies while in operation.

The Draft AI Law proposes two forms of conformity assessment for high-risk AI systems:

a) Certification of conformity before circulation or deployment: An authorized or recognized organization conducts the conformity assessment and issues a certificate.

b) Surveillance of conformity after circulation or deployment: The provider self-assesses conformity and is legally liable for the accuracy and veracity of the assessment results.

Organizations and individuals whose systems have been assessed for conformity are responsible for maintaining that conformity throughout provision and deployment. The competent authority may suspend or revoke the certificate and conformity mark if the system no longer meets the requirements or is involved in violations.

Providers and deployers of high-risk AI systems must register the system on the national single-window portal for artificial intelligence before deployment or market placement.

Furthermore, providers of high-risk AI systems must establish an automatic technical mechanism for logging system activities to facilitate tracing, safety assessment, periodic testing, identification of incident causes, and liability attribution.

For medium-risk AI systems: Organizations and individuals deploying these systems must comply with the regulations on transparency and labeling and with the general obligations specified in Article 12 of the Draft. The State oversees these systems through inspection and supervision. Foreign enterprises or organizations providing medium-risk AI systems to users in Vietnam must appoint a legal representative or contact point in Vietnam to fulfill their coordination, reporting, and legal liability obligations under this Law.

Conclusion

Vietnam's first Draft AI Law establishes a comprehensive risk-based legal framework. It ranges from an outright ban on unacceptable-risk systems to self-classification by providers and deployers, conformity assessment for high-risk AI, and transparency and labeling obligations for medium-risk AI. These provisions demonstrate Vietnam's commitment to controlling and promoting AI development responsibly, ensuring safety and transparency while positioning the nation as an attractive destination for technological innovation.

Date Written: 20/10/2025

Disclaimers:

This article is for general information purposes only and is not intended to provide any legal advice for any particular case. The legal provisions referenced in the content are in effect at the time of publication but may have expired at the time you read the content. We therefore advise that you always consult a professional consultant before applying any content.

For issues related to the content or intellectual property rights of the article, please email cs@apolatlegal.vn.

Apolat Legal is a law firm in Vietnam with the experience and capacity to provide related consulting services. Please contact our team of lawyers in Vietnam via email at info@apolatlegal.com.